How to make your awful technical SEO audit better

OK, well, maybe it's not awful. I haven't actually seen yours. But boy, there are a lot of terrible tech audits out there, and someone is writing them.

I certainly was, if we go back 3 or 4 years. It's not that my technical knowledge was bad, but rather that I was missing all the skills around it, which made my audits just... not very good.

Thankfully it's not just me; pretty much everyone makes these mistakes when they start. So let's unpick some of them and move together towards better work.

When this post will be useful to you: It should be an excellent primer to read before you start, and a helpful list of common mistakes to refer to when editing your own work.

What this post isn't: It doesn't give you the blow-by-blow of how to do a technical audit. It also doesn't have a neat checklist of tasks to complete (I'll refer you to my colleague Ben Estes for his good checklist).

Let's get started

Let's briefly define what bad is.

A technical audit is trying to:

- Find the technical problems with a website.

- Help a company solve those technical problems so they get more traffic.

So if we fail to do either of those things, we've done a bad job. It's usually the second that gets people.

How do we fail at those two things?

- Problem not fixed:

  - Fail to persuade: The problem doesn't get fixed because we're not persuasive. If we can't convince someone the problem is important, it won't get solved.

  - Fail to get done: This one is a bit of a catch-all. We persuade someone a problem is important, but for any number of reasons it still falls through the cracks.

- Problem fixed, no results: We fix the problems, but we can't show results.

A good technical audit can't guarantee you won't still have these problems, but a bad one can absolutely cause them.

Now we know our problems, let's jump into some of the common mistakes.

Mistake 1: Trying to do everything in one document

What's the problem?

We produce one document as the output of a tech audit.

It's really hard to make one document accomplish two very different things.

If we just have a Word/Google Doc that succinctly explains a problem to devs, it probably won't be good for persuading people (we'll fail to persuade). And vice versa: a document built to persuade probably won't give devs what they need (we'll fail to get done).

What should you do?

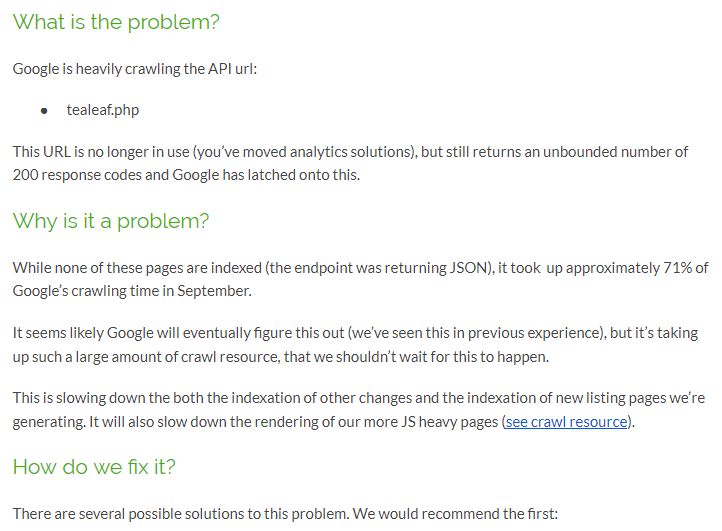

Usually I end up with 2 main documents.

- A presentation to walk people through the results. This is where I'll persuade. Ideally I'll do it in person; if I can't, I'll try to do it on a video call.

- A document which contains the details lacking from the presentation. I'll try to write the solutions so they can be copied and pasted into dev tickets with as little editing as possible. This is where I'm trying to make the job as easy and practical as possible to get done.

An example of a presentation slide and the equivalent document sections

(And then a spreadsheet which contains any other resources.)

Sidebar: Why a presentation for persuading people?

I'm sure we've all sat through our fair share of dreadful PowerPoints. You might be objecting loudly to my suggestion of a presentation.

Bad PowerPoints are bad. No doubt. But if you can do it well (a subject that could, and probably will, fill another blog post), the format has a lot going for it:

- You can pull all the relevant people into a room together, so you know they've all actually seen it.

- You can catch any big objections, know they exist and maybe even try to solve them then and there.

- Having someone actively present is usually far more engaging than just reading a document.

- Your knowledge is far larger than the document, so you can answer the questions they have that your document might not cover.

Get all the involved parties you need to succeed (developers, product owners etc.) and pull them into a room.

If there are a lot of them, or parts of your audit are only relevant to a certain audience, split it into sections to be respectful of people's time.

Present to them, answer immediate questions, catch big objections that people might have.

Mistake 2: Providing symptoms, not the root cause

What's the problem?

When you provide the symptoms, not the root cause, you don't actually make anyone's job easier (fail to get done), and you show a lack of knowledge about websites, which makes it harder to persuade (fail to persuade).

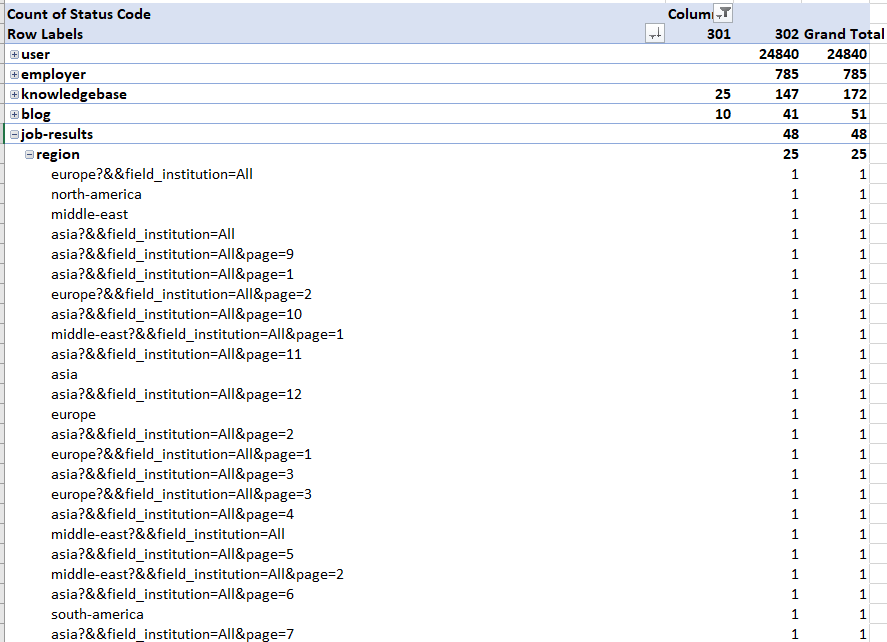

Here are two really common sentences to see in a tech audit:

- You have 3,451 titles which are over max length.

- You have 20,004 internal links which are 302 redirecting.

These statements are both technically true, but they miss something crucial about websites. Websites aren't built as 3,451 custom pages. They're built with templates.

It's far more likely there are 1 or 2 templated problems here, not 3,451. Here's a better version:

-

You have 3,451 titles which are over max length. There are 3 issues causing this:

- Your category pages append the brand twice to the title tag. (2,692 : 78%).

- Your blog pages are adding the category onto the title. (709 : 21%)

- Your resources pages haven't been correctly optimised. There are 50 pages to be re-written.

-

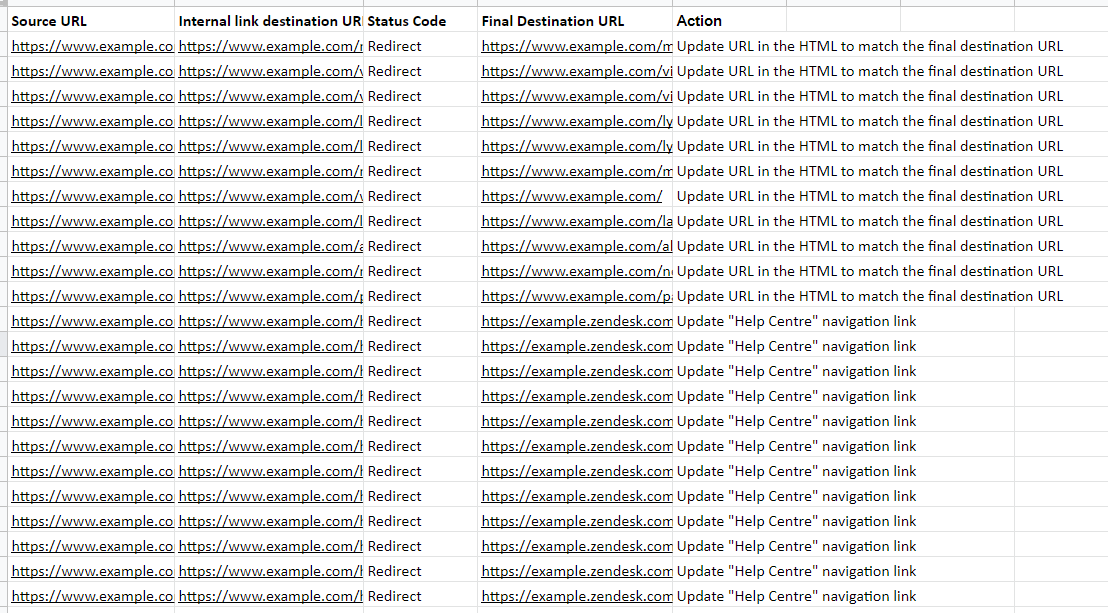

You have 20,004 internal links which are 302 redirecting. There are two issues causing this:

- You have 4 links in the main nav (see here), which are HTTP not HTTPS.

- Your HTTP protocol 302 redirects to your HTTPS protocol.

We want to give people the root causes to fix, not the symptoms.
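Your crawl tool may be able to export every internal link along with its status code. If it can, a few lines of Python will show whether thousands of redirecting links really come from a handful of targets. This is a minimal sketch: the `(source, target, status)` tuple format, the `top_redirecting_targets` function and the example.com URLs are all made up for illustration. Adapt them to whatever your tool actually exports.

```python
from collections import Counter


def top_redirecting_targets(links, n=5):
    """Group internal links that return a 302 by the URL they point at.

    `links` is a list of (source_url, target_url, status_code) tuples, a
    hypothetical shape for a crawl tool's "all inlinks" export.
    Returns (target_url, link_count, percent_of_302_links) rows, biggest first.
    """
    counts = Counter(target for _source, target, status in links if status == 302)
    total = sum(counts.values())
    return [
        (target, count, round(100 * count / total, 1))
        for target, count in counts.most_common(n)
    ]


# Toy data: three pages all link to the same HTTP URL (think: a nav link)
links = [
    ("https://example.com/a", "http://example.com/shoes", 302),
    ("https://example.com/b", "http://example.com/shoes", 302),
    ("https://example.com/c", "http://example.com/shoes", 302),
    ("https://example.com/a", "http://example.com/bags", 302),
    ("https://example.com/a", "https://example.com/bags", 200),
]

for row in top_redirecting_targets(links):
    print(row)
```

If one or two targets account for most of the total, you've probably found a template-level cause (a nav or footer link, say) rather than thousands of separate problems.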

What should you do?

You're probably beginning your audit by running a crawl. Your crawl tool will then give you back lots of problems with numbers attached to them.

Your job is to take those numbers in the hundreds and thousands, find the patterns and turn them into a couple of root problems.

Until we all get replaced by our mighty robot overlords, pattern matching is something humans are really good at. But some knowledge of how websites are built will help you here, rather than trawling through spreadsheets aimlessly.

How are websites built?

Websites are usually built with a series of templates & associated rules. A problem that exists on one page of the template will probably exist on others.

- When you find a problem check if it appears on other URLs in the same template.

But it does get slightly more complex than that.

There is probably a top level template for a web page, then every distinct element on the page will also have separate templates.

For example, the SEO text footer has a template, the related articles block has a template, etc.

- When you find a problem try to find the most specific template. That probably isn't page level. If the problem is on every blog page, but it's in the related articles block, then the problem is with that specific template.

A quick Excel trick

If you're struggling to spot them, here's a neat Excel trick to help you find them.

- Take your URL column. Copy and paste it into a new column.

- Find and replace the domain and first slash.

- Split the column by "/" and then you have folder paths.

- Put the data into a pivot table so you can easily see the breakdown by folder.
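You can sketch the same split-and-pivot in Python if your crawl export is too big for Excel to handle comfortably. This is an illustrative sketch, not a feature of any particular crawler. `folder_breakdown` and its `depth` parameter are hypothetical names, and the code uses only the standard library's `urllib.parse.urlsplit` and `collections.Counter`.

```python
from collections import Counter
from urllib.parse import urlsplit


def folder_breakdown(urls, depth=1):
    """Count URLs by their first `depth` path segments (a Python take on the Excel pivot).

    The scheme and domain are dropped, the path is split on "/", and the
    first `depth` segments become the grouping key. The homepage maps to "/".
    """
    counts = Counter()
    for url in urls:
        segments = [s for s in urlsplit(url).path.split("/") if s]
        folder = "/" + "/".join(segments[:depth]) if segments else "/"
        counts[folder] += 1
    return counts.most_common()


urls = [
    "https://example.com/blog/post-1",
    "https://example.com/blog/post-2",
    "https://example.com/category/shoes",
    "https://example.com/",
]
print(folder_breakdown(urls))
# [('/blog', 2), ('/category', 1), ('/', 1)]
```

Raising `depth` to 2 or 3 is roughly the equivalent of adding more of the split columns to the pivot's rows.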

Templates you might miss

Finally, there may be "hidden" templates. You're probably thinking of templates as pages that look the same, but they don't have to be. Remember, we're just looking for patterns. Let's take category pages as an example.

They might have:

- A template & set of rules for pages with products.

- A template & set of rules for pages without products.

Both pages look the same, but one might canonicalise to the root category. There are two different rules, even though there is one visual template.

Some example hidden templates that often have associated logic

- Category/SRP pages:
  - Items:
    - With items
    - Without items
  - Location levels:
    - Country level
    - City level
    - Postcode level etc.

- Product pages:
  - Expiration:
    - Expired
    - Not expired
  - Stock levels:
    - Out of stock
    - In stock

- Forum posts:
  - Number of comments:
    - Threads with no replies
    - Threads with replies

You get the idea.
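Hidden templates are easier to spot if you group pages by the condition you suspect, then compare how a rule behaves in each group. Here's a minimal sketch for the category page example. It assumes, hypothetically, that your crawl export gives you each page's URL, its canonical and a product count. The field names, `has_items` and `canonical_behaviour_by_group` are made up for illustration.

```python
from collections import defaultdict


def canonical_behaviour_by_group(pages, group_key):
    """Tally self-canonical vs canonicalised-elsewhere pages for each group.

    `group_key` is a function that assigns each page to a suspected hidden
    template. A missing canonical is treated as self-referencing (an assumption).
    """
    tally = defaultdict(lambda: {"self": 0, "elsewhere": 0})
    for page in pages:
        target = page["canonical"] or page["url"]
        bucket = "self" if target == page["url"] else "elsewhere"
        tally[group_key(page)][bucket] += 1
    return dict(tally)


pages = [
    {"url": "https://example.com/shoes/red", "canonical": "https://example.com/shoes/red", "product_count": 12},
    {"url": "https://example.com/shoes/blue", "canonical": "https://example.com/shoes", "product_count": 0},
    {"url": "https://example.com/shoes/green", "canonical": "https://example.com/shoes", "product_count": 0},
]


def has_items(page):
    # Hypothetical rule: split category pages on whether they list any products
    return "with items" if page["product_count"] > 0 else "without items"


print(canonical_behaviour_by_group(pages, has_items))
```

A lopsided split like this (every empty category canonicalising elsewhere, every populated one self-canonical) is the signal that there are two rules behind one visual template. Swap `has_items` for stock status, expiry or location level to check the other hidden templates.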

Your 10 second sanity check

Anytime you find yourself referencing a long list of URLs... that's usually a sign that you've missed a pattern.

Mistake 3: Not making a choice

What's the problem?

You've provided multiple answers with pros and cons, but no choice.

You've just handed the decision burden entirely onto them, which is almost certainly not what they wanted.

What should you do?

Pick one!

Option 1 - You know both impact & difficulty

C'mon, you totally could've picked one. Stop putting it off and choose.

Option 2 - You know impact, but have no idea about difficulty

If you don't know the difficulty, you can just pick the best option for search (see mistake 4) and get ready for compromise (see mistake 5).

(Although take a look at mistake 5 and see if you can take a guess at difficulty.)

Option 3a - You don't know difficulty or impact

We know nothing. This is surprisingly common.

Tell them to test it.

Testing is good and honesty is better than lying.

Option 3b - You don't know difficulty or impact, and you can't test

What if you can't test it? Boy, you sure have a lot of objections.

Well, you're gonna have to take a punt at difficulty (you could also roll a dice). You could do that from a development point of view (last time your dev team took forever to change titles) or an organisational one (perhaps brand will hate one option more than the other).

Finally, tell them how you've made the decision.

Option 4 - One of your options is super risky

Don't know their appetite for risk?

Maybe they love betting it all on black. Perhaps they call it a night when they're up a dollar. You have no idea.

Eugh, an exception to the rule. This is actually one of the few times it's OK to punt back to someone.

Mistake 4: No prioritisation (or bad prioritisation)

What's the problem?

In tech audits we often ask for too many things and don't prioritise.

Actually, we tend to do this all the time: SEO departments will fill up JIRA with endless tickets for everything that's wrong with the website, with no prioritisation.

We end up being de-valued and find it hard to get things done.

Why do we do this? IMO it's a combination of a couple of things, both human & the nature of search:

- We spend our time close to our problems. That makes them feel important & we want to report them. It's easy to forget the wider business context. (Particularly when you're only KPI'd on one thing.)

- Unfortunately, our problems, unlike many others, are often hard to measure (and accompanied by hand waving and midnight rituals). That makes them hard for us/the business to value.

- This hard-to-measure thing has also led to all sorts of superstition about what Google does. We often flag anything that could possibly be an issue to make sure we've covered our backs when the next update hits.

- Our work can also take a long time to bear fruit. While some changes are faster (I found titles & metas for most large sites were picked up in under a week), there are plenty more that won't show success in an immediate way.

These all combine in fun ways. The end result is the SEO department ends up in the organisational dog house, known for crying wolf and making constant demands without proof. That reputation can form long before you arrive at a company and hang around for a long time afterwards.

It makes it hard for our tech audit to succeed.

What should you do?

Every item in our tech audit should be prioritised. We need a table/list with every action, sorted by impact and ideally also effort (see mistake 5).
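The table doesn't need to be fancy. Here's a rough sketch, assuming you've scored each action on a simple 1 to 3 scale for impact and effort. The scores below are invented for illustration, not measurements. Sorting by impact first and effort second gives you the order to present things in. `Action` and `prioritise` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Action:
    name: str
    impact: int  # 1 (low) to 3 (high): a rough estimate, not a measurement
    effort: int  # 1 (low) to 3 (high)


def prioritise(actions):
    """Highest impact first; among equal impact, lowest effort first.

    sorted() is stable, so exact ties keep the order they were listed in.
    """
    return sorted(actions, key=lambda a: (-a.impact, a.effort))


actions = [
    Action("Fix duplicated brand in category page titles", impact=3, effort=1),
    Action("Rewrite 50 resources page titles", impact=1, effort=2),
    Action("Change 4 main nav links from HTTP to HTTPS", impact=3, effort=1),
    Action("Stop blog titles appending the category", impact=2, effort=1),
]

for a in prioritise(actions):
    print(f"impact={a.impact} effort={a.effort}  {a.name}")
```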

What can we use to prioritise impact?

-

Gut.

- Not the most compelling way, but at least we're trying.

-

Previous experience

- Arguably this is just gut, but we actually remember what happened rather than it being a vague feeling.

-

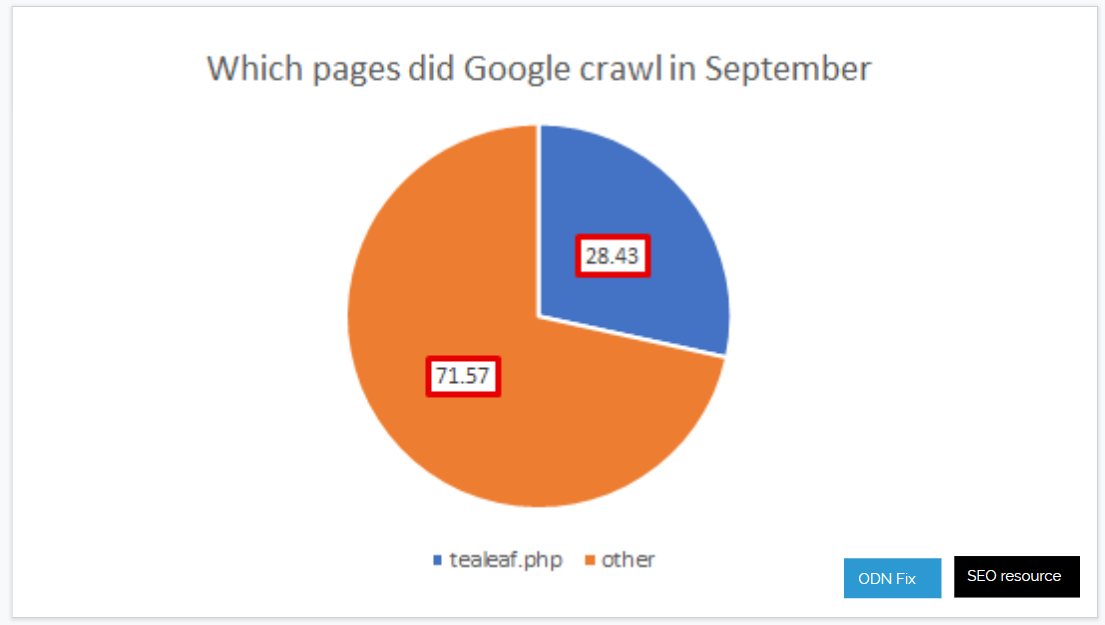

Logs.

- Particularly for crawling problems, this gives exact numbers on how much time Google is spending doing different things. Is that 302 redirect chain from your language switcher a big deal? Your logs will help you figure that out.

-

Traffic, sessions or revenue.

- While we might not be able to estimate the exact impact, we can at least know how important the pages we're altering are.
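If you have access to raw server logs, a short script can turn them into those numbers. This is a minimal sketch that assumes the common Apache/Nginx "combined" log format; `googlebot_hits` and the sample lines are hypothetical. It only checks the user-agent string, which can be spoofed. For anything important, verify the IPs as well (Google documents a reverse DNS lookup for this).

```python
import re
from collections import Counter

# Assumes the "combined" log format; adjust the pattern if your server logs differently.
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests whose user agent claims to be Googlebot, keyed by (first folder, status)."""
    counts = Counter()
    for line in lines:
        m = LOG_LINE.match(line)
        if not m or "Googlebot" not in m.group("agent"):
            continue  # unparseable line, or not (claiming to be) Googlebot
        path = m.group("path").split("?")[0]  # ignore query strings
        first = path.strip("/").split("/")[0]
        folder = "/" + first if first else "/"
        counts[(folder, int(m.group("status")))] += 1
    return counts


UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /en/shoes?colour=red HTTP/1.1" 302 0 "-" "{UA}"',
    f'66.249.66.1 - - [10/Oct/2023:13:55:37 +0000] "GET /en/bags HTTP/1.1" 302 0 "-" "{UA}"',
    f'66.249.66.1 - - [10/Oct/2023:13:55:38 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" "{UA}"',
    '203.0.113.7 - - [10/Oct/2023:13:55:39 +0000] "GET /en/shoes HTTP/1.1" 302 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
print(googlebot_hits(sample).most_common())
```

Grouping by folder and status code makes it quick to see, for example, what share of Googlebot's requests to a section end in a 302.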

What can we use to prioritise effort?

- We're about to dig into this in the next section. (Jump to 5. If you pass Go, collect 200.)

Should we even include everything?

If our document is getting super long, it's time to take a long hard look at our really unimportant items. Should we even bother including them?

My personal preference is often to leave them out. Check this one with whoever is getting the report, however. If someone is paying for a standalone audit, they often do want every tiny thing, even if it never ends up getting fixed.

My best friend here is the appendix. Nothing says this doesn't matter like an appendix.

Mistake 5: Not being ready for compromise

What's the problem?

"Here's what you should do."

"We can't do that."

"Well you should. If you want to win, this is what you need to do... Good luck!"

As good as our solutions are, they probably won't survive contact with the rest of the business. They won't just let us put all the links on the homepage, or remove all tabs because Google devalues content inside them (I know Gary says it doesn't; all our tests are saying it does. Feel free to fence-sit).

We have to talk to other humans. And they're the worst.

Too many tech audits just dump a bunch of recommendations and spend no time considering the arguments they're going to cause or whether they're at all practical (fail to get done/fail to persuade).

This is at some level an extension of our previous point: prioritisation. We need to prioritise based on effort and not just return.

What should you do?

We need to:

- Prioritise based on return and effort.

- Prepare compromises where we think it's unlikely that our first version gets through.

This is never easy, and a lot of it comes down to experience, but I'll do my best to provide some guidelines.

How can we anticipate effort?

There are two big sources of effort and we'll look at both of them.

- Development work.

- Other people/departments.

Anticipating other department problems

- Know all the other departments' KPIs.

- Look at what all the other departments are changing. If they're changing something often, chances are they care about it.

- Look at what the other departments are responsible for. If you're changing their thing, they're probably going to care.

- Find out which department has the highest headcount. It's often a decent proxy for budget and how much an organisation cares about something. A brand department three times the size of everything else means you'll be running into them at every corner.

Anticipating how much development time it will take

Welcome to the crapshoot. Take two websites I've worked with:

Website A:

- Changing titles was far easier than changing the results their internal search engine returns.

Website B:

- It was easier to change the number of products on a category page than the title.

There's a lot of subtlety to this; even devs struggle with it. There can be so much hidden complexity & legacy in a company that it's very easy to be wrong and sound like an idiot.

So what can you do?

- Talk to everyone you can. The longer someone has worked for a company the better the feel they'll usually have.

- Even better: Get to know a dev as you're working through the audit. You can run the occasional thing quickly past them and sometimes they'll be able to give you a feel for difficulty.

- Take a guess, then when you turn out to be wrong, go back, fix the numbers for yourself and start building that experience.

Some common blockers

Here's a random list of blockers for inspiration:

- General: Anything visual on the page might trigger the brand team. Particularly in ecommerce.

- General: Often a new sub-domain means that part of the website is built on something different. The difficulty of changing this area will probably be different to others.

- General: Run BuiltWith on sections that look notably different to see if they're built on different tech. If they are then the difficulty of changing this area will probably be different to others.

- General: Bits of website functionality that aren't core to the website's experience (e.g. a community forum) have a good chance of being harder to change (because they're often on externally hosted platforms with no dev resource).

- Ecommerce: Anything related to product management (e.g. category page creation or expired products) might have to go through merchandising.

Thoughts?

As always, I'll leave it by asking for your thoughts. I've probably missed some things, but I wanted to get this out the door.

Discussion in dem comments.